Achieving and Maintaining High

Availability in PostgreSQL

Availability in PostgreSQL

Understanding the Tools, Techniques, and Technologies of PostgreSQL High Availability, and How to Leverage New Innovations to Maximize Database Uptime Performance and Reliability

Being “Always On” with the right database architecture is now more crucial than ever

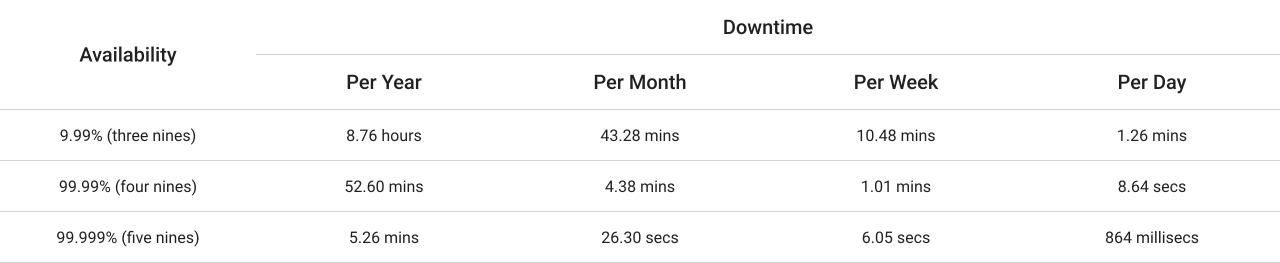

Customer demand is changing, and so is how we perceive High Availability (HA). The initial purpose of HA databases was to protect users from hardware, software, and network failures. But as technology improved and databases grew more reliable, customers and businesses didn’t just need protection – they needed “Always On” capabilities and “three 9s, four 9s, and five 9s” uptimes.

Despite advancements in hardware, network, and database technology, many organizations still risk facing detrimental database failures. A whopping 60% of data operations have experienced an outage in the past three years, with 60% of these outages having productivity disruptions between four to over 48 hours. The cost is significant: 70% of outages result in over $100,000 to over $1 million in total losses.

Ensuring your business can handle failures and recover from outages is crucial, so it’s crucial that businesses consider HA databases and implement an HA architecture that ensures maximum reliability and continuity.

Join us as we dive into achieving high availability, from the techniques and tools available today to the innovations that allow businesses to attain and maintain extreme high availability.

Achieve best-in-class High Availability for PostgreSQL, no matter the setup

Defining High Availability and understanding the “three 9s, four 9s, and five 9s

High availability (HA) is a system characteristic that establishes an agreed level of operational performance, usually uptime, for a higher-than-normal period.

High availability is not to be confused with disaster recovery (DR) and fault tolerance, though some aspects do overlap. Disaster recovery refers to the process of maintaining or reestablishing vital infrastructure and systems following a natural or human-induced disaster. Fault tolerance is a design that enables a system to continue its intended operation (at a reduced level) rather than failing completely, once one part of the system fails.

High availability databases rely on redundancy, which involves having a backup server ready to seamlessly take over and perform a database restore. Downtime, then, becomes a fleeting inconvenience rather than a crippling blow.

How do you calculate or measure high availability? This is usually done by defining and committing to a certain uptime that is part of your availability service level agreement (SLA), which dictates the “three 9s, four 9s, or five 9s” availability percentage corresponding to the amount of time a system would be unavailable.

Know when and what to invest when considering a HA database for your specific business needs

While high availability architecture provides increased protection against data-tier downtime, it’s crucial that businesses first understand what they are getting into.

If your environment tolerates some downtime, high availability architecture might introduce unnecessary costs and complexity. The best option for your business use case depends on your tolerance for downtime and the respective tradeoffs of various other architectures.

Here's how you can decide what high availability option is best for your business.

-

Determine the level of availability you hope to achieve

Do you need a system that allows your business to continue functioning only during a single zone or complete region failure?

-

Understand your operational budget

To provide high availability, your infrastructure and storage costs will increase. It’s best first to determine how much you’re capable of spending.

-

Know the cost to your business if there is a downtime in the data persistence tier

A key question is what services or customers rely on your data tier. If a service caters only to occasional internal users, your system doesn’t need the HA capabilities an end-customer-facing service needs for constant continuity.

-

Understand your RPO (Recovery Point Objective)

Can you afford to lose data due to a failover? The distributed nature of HA topologies means a tradeoff between commit latency and the risk of data loss during a failure.

-

Know Your RTO (Recovery Time Objective)

Multiple HA options vary depending on how quickly the system can failover and be available to users and customers. If you know your RTO, you’ll know the right HA option to get.

Clearly defining your RPO and RTO is crucial, as both concepts go hand-in-hand. Do note that achieving the lowest amount of either tends to be the most expensive endeavor. Trying to find an acceptable balance between both will influence decisions on technology acquired and design decisions.

The “good, fast, cheap: choose two” rule applies here. Depending on your needs, budget, and risk tolerance, your HA architecture will have tradeoffs in performance, capabilities, and cost.

Understanding the underlying HA technology and architecture of PostgreSQL

Fundamentally, high availability with PostgreSQL databases works in two ways:

-

Streaming Replication

A replication approach in which the replica connects to the primary and continuously receives a stream of WAL records. Streaming replication lets the replica stay more up-to-date with the primary, compared to log-shipping replication.

Replica node infrastructure is independent from the primary, with each node requiring dedicated compute and storage resources. Replica nodes can be used as hot standbys to serve read-only client queries, allowing load-balancing across the primary and the replicas. -

Synchronous Streaming Replication

Databases can also configure streaming replication as synchronous by choosing one or more replicas to be synchronous standby.

In this case, the primary doesn’t confirm a transaction commit until after the replica acknowledges the transaction persistence. This method provides increased durability but leads to higher transaction latency.

What about the high availability architecture with PostgreSQL? At the most basic level, data tier HA consists of:

This underlying architecture is then combined with various tools and approaches to minimize downtime in the event of infrastructural and zonal outages.

- A mechanism to identify if and when a primary node experiences failure

- A failover process in which a replica node is promoted to a primary node

- A method to change the query routing so that the application requests can reach the new primary node

- A way to fall back to the original architecture using pre-failover primary and replica nodes in their original capacities (this is optional, but good to have)

Important things to consider

For businesses, when choosing between approaches, it’s important to balance commit latency and durability according to their business needs.

Similarly, businesses should select an HA architecture that can perform manual failovers or switchovers with the production infrastructure. This is because of the time and manual efforts needed to prepare a new standby environment for subsequent failover or fallback.

Otherwise, the system can only withstand one failure, and the service won’t have protection from an SLA violation. Remember: what HA architecture you get depends on the three 9s, four 9s, or five 9s uptime you need to achieve based on your SLA.

Get to know the expert-designed tools essential to run a HA system with PostgreSQL

One thing needs to be made certain: businesses should not consider building their own failover system.

Sure, a homegrown HA system can be tailored to your business environment, but there are far too many variables, risks, and gaps to address before it becomes viable. Does it account for false failovers and split brains? Is it tested for different implementations? Will you get the support you need? It’s far too difficult, too time-consuming, to account for them all.

Community or commercial tools, on the other hand, are exclusively designed by PostgreSQL experts, in a PostgreSQL-specific paradigm. Many issues and edge cases would have been discovered and addressed that a custom system would not have.

Here are the key tools and technologies to consider:

-

Repmgr

One of the more “traditional” failover systems, Repmgr started life as a system for creating PostgreSQL replicas more easily. It’s written in C and uses a customer Raft-like consensus, which means it needs at least three nodes to operate. While it supports Witness nodes, each Witness stores its data in a local independent PostgreSQL database, making it likely more difficult to deploy. Repmgr offers hooks to handle custom fencing strategies, failover consensus, and other advanced functionality. This usually requires extensive manual scripting and is mainly recommended for more advanced users.

-

Patroni

Patroni is the first “modernized” failover system. Written in Python, it doesn’t rely on quorum - rather, it defers consensus handling to an external consensus layer like etcd, and employs a leadership lease that may only be held by one node at a time. Patroni publishes node status via a REST API that load balancers can directly poll. This means traffic control is implicitly tied to node status, and since only one node can ever hold the leadership lease, a split brain is not possible. Any new node joining the cluster will either sync from the current leader or rewind if it was previously the primary node.

-

Pg_auto_failover

Rather than relying on consensus, the pg_auto_failover HA tool employs a sophisticated state machine where a single Monitor process makes decisions for the entire cluster, making it a bit of an outlier. While this Monitor process essentially guarantees a non-PostgreSQL Witness exists in the cluster, it also gives the HA system a single point of failure. That said, it’s also the only HA system that currently supports distributed PostgreSQL systems like Citus, where a multitude of “worker” nodes act as sharded data stores for a coordinator node. This requires a complicated multi-layer mechanism to handle failover for multiple clusters simultaneously and is an otherwise ignored edge case.

Much like the HA systems above, businesses can also rely on available backup tools rather than build their own. Custom ones may not properly handle WAL files or Point In Time Recovery (PITR), among other concerns. Backup tools designed specifically for PostgreSQL HA systems by experts include:

The decision to use one or the other often boils down to preference, but both tools offer incremental backup, parallel backup and restore, integrated WAL management, PITR handling, encryption, compression, cloud storage integration, and much more.

Fundamental limitations that can affect HA systems and risk violating SLAs

As discussed above, PostgreSQL users commonly rely on physical streaming replication-based high availability architecture to achieve High Availability. This is then combined with various tools such as Patroni or Repmgr to enable automatic failover or switchover, ensuring the availability of Postgres in production.

PostgreSQL’s Native Logical Replication (or PNLR for short) has a few fundamental limitations that can affect HA systems. The examples include but are not limited to:

-

Data Definition Language (DDL) operations are not replicated

Operations such as creating and dropping tables need to be performed independently on each node. Since transaction consistency cannot be ensured in DDL operations, additional maintenance windows, during which the database receives no traffic, are required. This detracts from the system’s ability to be highly available.

-

There is no ability to failover

If either the source or the target node goes down during a heavy period of replication traffic, there is a significant risk that the entire replication process for a given set of tables will need to be restarted, and previously replicated data will need to be resent. Therefore, PNLR servers are at greater risk than PostgreSQL physical replicas for having significant data loss when taking over as the primary data source for an application as a result of the current primary data source failing.

-

Logical replication systems require that each row in a replicated table have a primary key.

This is to properly identify updated and deleted rows during replication. The primary key needs to be unique across all nodes in the cluster. PostgreSQL native logical replication provides no built-in mechanism to ensure that newly created and unique records on different nodes don’t have the same primary key. The risk is that completely different records in a cluster are represented as the same record. Creating the assurance of unique primary keys is entirely the responsibility of the application developer, making it more error-prone.

-

PNLR is not integrated with backup and recovery solutions.

-

PNLR does not come with best practices and proven architectures for achieving common tasks.

This includes procedures to use PLNR for upgrades and maintenance operations. Such operations need to be built and extensively tested for each deployment. PNLR only replicates in one direction. Therefore, when used in an upgrade scenario, if things go wrong after the upgrade and you encounter an issue, going back to a previous version of the database software is very complex and potentially impossible if not properly planned for in advance.

-

PNLR only replicates in one direction.

Therefore, when used in an upgrade scenario, if things go wrong after the upgrade and you encounter an issue, going back to a previous version of the database software is very complex and potentially impossible if not properly planned for in advance.

Taking the above into account, this means that certain crucial activities can still compromise high availability and risk violating uptime SLAs when using physical streaming replication. They include:

- Database maintenance operations

- Patching the system

- Major version upgrades

- Increasing resources (CPU, memory, disk space, etc)

- Unplanned outages

- Configuration and parameters changes that require a restart

Going beyond standard PostgreSQL high availability features to achieve 99.999% uptime

To address the challenges of physical streaming replication-based high availability architecture, EDB sought to rethink the approach to achieving high availability and developed technology that could take PostgreSQL’s features further.

Enter EDB Postgres Distributed (PGD). EDB PGD uses Postgres' logical replication capability to achieve extreme high availability (up to “five 9s”, or 99.999%) uptime while allowing critical database activities to continue without affecting productivity and availability.

EDB PGD architecture promotes extreme high availability for your database through various techniques:

-

Automatic Failover/Switchover:

EDB PGD provides a different approach to cluster management than tools like Repmgr and Patroni, which use physical streaming replication. With EDB PGD, there is no need to promote a node in the cluster because it uses a consensus layer to identify the new primary node and redirect connections accordingly.

-

Uninterrupted online database maintenance:

In EDB PGD, all nodes receive logical changes from each other, providing users with the ability to carry out complex maintenance tasks without affecting availability. This allows maintenance operations to be performed on shadow primaries (non-lead primary nodes). Then, a switchover can be performed to carry out the same primary activity on the lead, preventing an impact on businesses and customers.

-

Patching the system/Postgres with no impact:

EDB PGD enables updating or patching the system while minimizing the impact on the availability of EDB PGD clusters, much like database maintenance. This means you can start by patching the non-lead primary nodes in EDB PGD and then proceed with the switchover to perform the same operation on the lead primary.

-

In-place major version upgrade:

Unlike physical streaming replication, EDB PGD allows nodes of different versions to coexist in a cluster. This feature will enable users and administrators to upgrade nodes individually to a major version and perform the same operation on the lead primary after the switchover.

-

Increasing resources on the system:

When additional resources are added to the system, the Postgres parameters must be adjusted to utilize the newly available resources. Unlike physical streaming replication, EDB PGD allows users to configure different settings for parameters on different nodes.

A look into Active-Active architecture and how it drives data resiliency

EDB Postgres Distributed is the first to deliver Active-Active architecture.

“Active-Active” architecture, or Geo-Distributed Active Architecture, is a data resiliency architecture that distributes database information over geographically distributed nodes and clusters. It is a network of separate processing nodes with access to a common replicated database. All nodes can participate in a typical application, which means local low latency with each region capable of running in isolation.

EDB PGD uses Postgres' logical replication capability and empowers users to achieve up to five 9s, i.e. 99.999% uptime, while allowing them to perform certain activities critical for their database optimization, such as updates, upgrades, and maintenance.

It does so through two key EDB PGD components:

-

PGD Proxy

Plays a crucial role in routing a connection to the lead primary while forwarding/re-routing connections to the elected lead primary in case something happens to the current primary taking all the load. -

Bi-Directional Replication (BDR)

Ensures the data on all nodes is synchronized and responsible for replicating DDLs and other objects.

With this, in addition to providing extreme high availability, EDB Postgres Distributed can also:

-

Distribute workloads geographically:

EDB PGD can also be used to distribute the workloads on the database based on geography. For example, if you have a three-node EDB PGD architecture and these nodes are spread across the globe, you can use each country's local database to manage the respective countries' workload.

-

Provide data localization security:

Advanced logical replication in EDB PGD also allows you to choose access rights and maintain data sovereignty—protecting your organization and limiting threats.

Access Our Resources on EDB PostgreSQL Distributed

Learn how PostgreSQL Distributed achieves extreme High Availability to maximize efficiency and reduce risk

Learn the power of EDB PostgreSQL Distributed through 3 Must-Read Success Stories

How do you achieve near-zero downtime? In this webinar, Gianni Ciolli, VP of Solutions Architecture and Field CTO, discusses achieving up to five 9s availability on PostgreSQL implementation.

Delve further into the capabilities of EDB Postgres Distributed – from multi-master replication to advanced conflict management – in this in-depth whitepaper.

PostgreSQL provides the foundation necessary to have high availability. This includes configurations for physical and logical replication and consistent physical backups with PITR or point-in-time recovery capabilities. High availability can be achieved or maximized for single database instances and multi-master PostgreSQL deployment options.

PostgreSQL does not offer a complete HA solution, necessitating other open-source extensions and tools to achieve HA uptimes. To achieve HA in PostgreSQL, there must be three key aspects: redundancy, no single point of failure (SPOF), and failover.

Generally, high availability is facilitated by three primary mechanisms:

- Clustering: Involves grouping multiple nodes to work together as a single, unified system.

- Load balancing: Distributing incoming network traffic across a group of backend servers, spreading the work evenly to prevent any single server from overloading or becoming a bottleneck.

- Replication: The process of copying and maintaining database objects across multiple servers or sites, allowing applications to access data reliably and quickly.

Both are crucial components of a secure and comprehensive data protection strategy, but they are fundamentally different. Backup works by creating copies of data and storing them separately from a primary system, acting as a safety net and a restore point for when data loss, corruption, or system failures happen.

PostgreSQL does not offer a complete HA solution, necessitating other open-source extensions and tools to achieve HA uptimes. To achieve HA in PostgreSQL, there must be three key aspects: redundancy, no single point of failure (SPOF), and failover.

Reliability aims to minimize system failures and downtime, while availability is about maximizing operational time. Reliability quantifies the likelihood of a system or equipment to operate as intended without disruptions or downtime, focused on the success and dependability of the asset to continue working without failures. Availability is focused on maintaining operations in the event of failure.

A distributed database stores data on multiple servers or in a cluster of computers consisting of individual nodes. These nodes are geographically separate and may consist of virtual machines within a cloud database or physical computers.

Failover is the process of switching or transferring from a primary system to a secondary or backup system when the primary fails. Essentially, the secondary system takes over the workload of the failed primary system, therefore eliminating or reducing the impact on users when a system failure occurs.

In short, a failover is the process of transferring from a primary to a secondary system during a failure. A switchover, on the other hand, is when these changes happen in a planned way. In this scenario, the primary would be cleanly shut down, and the secondary would be promoted to be the primary as part of a scheduled, predetermined process.

Asynchronous replication copies data to other PGD cluster members after the transaction completes on the origin node. It can provide higher performance and lower latency than synchronous replication.

PostgreSQL itself does not provide built-in tools for detecting server failures. There are, thankfully, numerous tools available that enable Automatic Failover, which can help detect failures and automatically switch to the standby system, thus minimizing database downtime. We covered these technologies in Section 5.

Yes, we do. Failover Manager (EFM) is a tool for managing Postgres database clusters. It enables high availability of primary standby deployment architectures using streaming replication. You can use EFM with PostgreSQL or EDB Postgres Advanced Server.

Patroni is a failover system and cluster manager that can customize and automate the deployment and maintenance of high availability in PostgreSQL. It can be thought of as a template for creating a customized high availability solution. Key benefits include continuous monitoring, automatic failover, manual/scheduled switchover (with a single command), REST APIs for entire cluster configuration, and more. Click here to learn how to install Patroni on PostgreSQL.

We have different plans to accommodate various business and availability needs. Compare our plans here.

EDB Postgres Distributed (PGD) provides multi-master replication and data distribution with advanced conflict management, data-loss protection, and throughput up to 5X faster than native logical replication. It enables distributed Postgres clusters with high availability up to five 9s.

By default, EDB Postgres Distributed uses asynchronous replication, applying changes on the peer nodes only after the local commit. You can configure additional levels of synchronicity between different nodes, groups of nodes, or all nodes by configuring Group Commit, CAMO or Eager Replication.

EDB PGD is compatible with PostgreSQL, EDB Postgres Extended Server, and EDB Postgres Advanced Server and is deployed as a standard Postgres extension named BDR. Supported version combinations are listed here:

.png)

Yes! You can try EDB Postgres Distributed on EDB BigAnimal. EDB BigAnimal makes it easy to set up, manage, and scale your databases. The addition of distributed high-availability support powered by EDB Postgres Distributed (PGD) enables single- and multi-region Always On Gold clusters. Get started for free.

The architectures for EDB Postgres Distributed can be configured depending on your business needs. They range from single-location architectures to complex distributed systems that protect from hardware and data center failures. The architectures reflect EDB’s Trusted Postgres architectures, encapsulating practices that help users achieve the highest possible service availability in multiple configurations.

The Always On architecture configurations include Single Data Location, Two Data Locations, Two Data Locations + Witness, and Three or More Data Locations. Regardless of which you choose, all architectures provide hardware failure protection, zero downtime upgrades, and support availability zones in public and private clouds. You can use EDB PGD for architectures beyond the examples listed above. Note that use-case-specific variations have been successfully deployed in production. However, these variations must first undergo a rigorous architecture review.

EDB PGD is intended to be deployed in one of a small number of known-good configurations, using either Trusted Postgres Architect or a configuration management approach and deployment architecture approved by Technical Support. Manual deployment isn't recommended and might not be supported.

There are multiple methods to deploy and install EDB Postgres, which you can find in detail here.

Interested in EDB PostgreSQL Distributed?

Looking to preserve uptime for mission-critical applications without exorbitant costs?

Reach out to us with your requirements, and let’s get you started toward a more flexible, cost-efficient, and scalable database management solution.