Introduction

refdata TAM is an optional storage extension included in the EDB Advanced Storage Pack.

refdata TAM is an alternative data table storage designed to provide a performance boost on mostly static data, especially when the table using this TAM is referenced by other tables through foreign keys. refdata avoids unnecessary row-level locks during some read-only operations.

The refdata TAM is designed to work with static data, that is, data that requires minimal INSERT and very few DELETE and UPDATE commands.

Some common examples of static data are:

- Postal codes

- Transaction codes

- Language codes

- Currencies

- Financial hierarchies

- Organizational unit types

- State or country codes

- Customer segments

- Tasks and business processes

A transaction that modifies a refdata table uses a table-level ExclusiveLock, which blocks both concurrent modifications by another session and modifications to tables that reference the refdata table.

For more information about using refdata TAM and the EDB Advanced Storage Pack: https://www.enterprisedb.com/docs/pg_extensions/advanced_storage_pack/using/#refdata-example

In this blog post, we will discuss the tests performed by the Performance Engineering and Architecture Team (PEAT) at EDB to evaluate the performance impact of using refdata TAM vesus using heap only storage in the OLTP context.

Impact on Performance

Benchmark Description

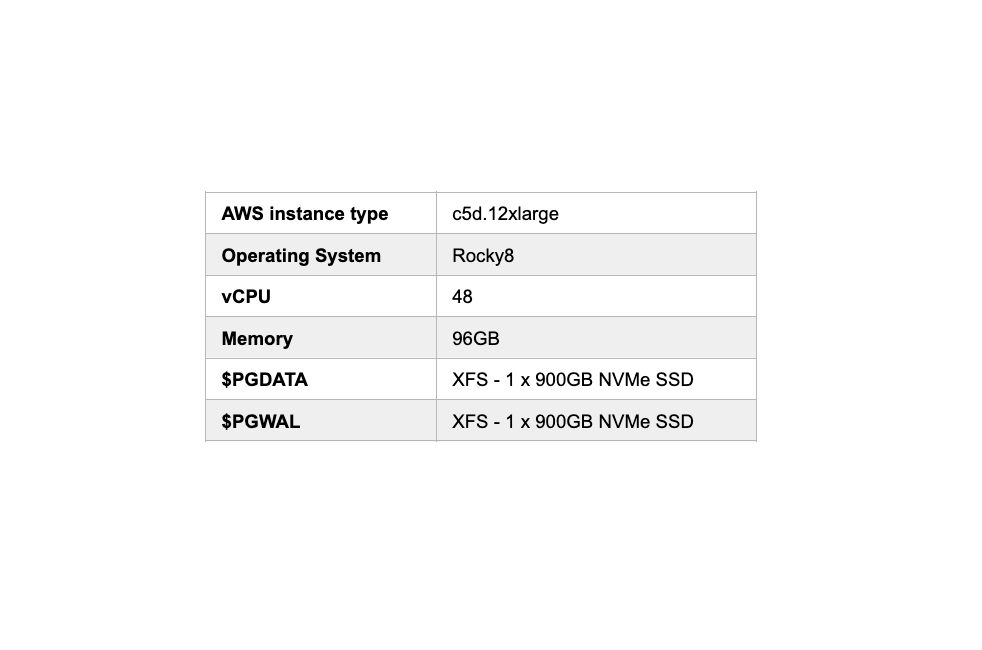

The workload used in this benchmark is TPROC-C (HammerDB) with two databases populated by 2000 warehouses (~200GB of data). The target database engine is PostgreSQL in version 15. Note: At the time when this benchmark was performed, ASP packages for EPAS 15 did not contain all the latest optimizations for the refdata TAM.

There are two tests executed in this benchmark:

- long-run test (1 hour) executed with a high (75) number of virtual users against the database using heap only storage

- long-run test (1 hour) executed with a high (75) number of virtual users against the database using heap and refdata storage

These tests were run three times. We are measuring the Number of Orders Per Minute (NOPM), as well as system metrics (CPU, memory, IOs) for each test.

Using refdata in TPC-C Context

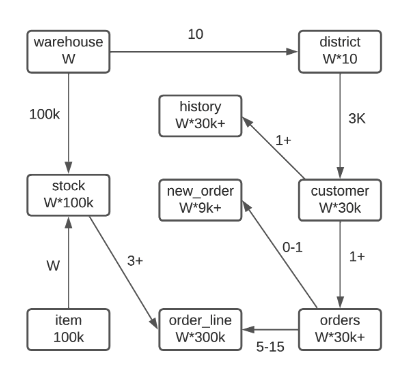

The TPROC-C benchmark produced by HammerDB is derived from the TPC-C benchmark. Its data model is composed of 9 tables.

According to TPC-C specifications, the item table appears to have all the characteristics of a reference table:

- a table size is stable, whatever the scale factor (number of warehouses) is;

- during the workload execution, no modifications are applied on the records: INSERT/UPDATE/DELETE;

- a foreign key constraint can be defined between the stock and item tables.

Foreign Key Creation

HammerDB’s TPROC-C benchmark does not use foreign key constraints. In order to define more realistic conditions, the following DDLs are executed:

ALTER TABLE district ADD CONSTRAINT fk_district_warehouse FOREIGN KEY (d_w_id) REFERENCES warehouse (w_id); ALTER TABLE customer ADD CONSTRAINT fk_customer_district FOREIGN KEY (c_w_id, c_d_id) REFERENCES district (d_w_id, d_id); ALTER TABLE history ADD CONSTRAINT fk_history_customer FOREIGN KEY (h_c_w_id, h_c_d_id, h_c_id) REFERENCES customer (c_w_id, c_d_id, c_id); ALTER TABLE history ADD CONSTRAINT fk_history_district FOREIGN KEY (h_w_id, h_d_id) REFERENCES district (d_w_id, d_id); ALTER TABLE new_order ADD CONSTRAINT fk_new_order_orders FOREIGN KEY (no_w_id, no_d_id, no_o_id) REFERENCES orders (o_w_id, o_d_id, o_id); ALTER TABLE orders ADD CONSTRAINT fk_orders_customer FOREIGN KEY (o_w_id, o_d_id, o_c_id) REFERENCES customer (c_w_id, c_d_id, c_id); ALTER TABLE order_line ADD CONSTRAINT fk_order_line_orders FOREIGN KEY (ol_w_id, ol_d_id, ol_o_id) REFERENCES orders (o_w_id, o_d_id, o_id); ALTER TABLE stock ADD CONSTRAINT fk_stock_warehouse FOREIGN KEY (s_w_id) REFERENCES warehouse (w_id); ALTER TABLE stock ADD CONSTRAINT fk_stock_item FOREIGN KEY (s_i_id) REFERENCES item (i_id);System Characteristics

PostgreSQL Tuning

The following PostgreSQL settings have been applied on all database clusters:

shared_buffers='16GB' max_connections=1000 checkpoint_completion_target='0.93' checkpoint_timeout='30min' max_wal_size='300GB' effective_cache_size='80GB' random_page_cost=1 seq_page_cost=1 effective_io_concurrency=200Results

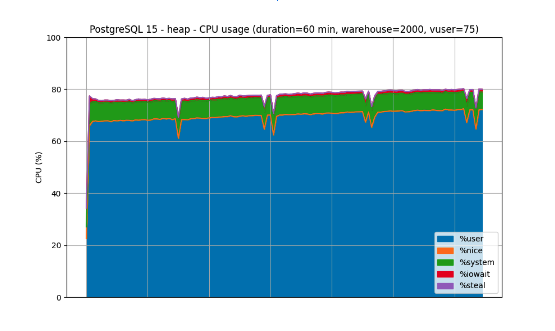

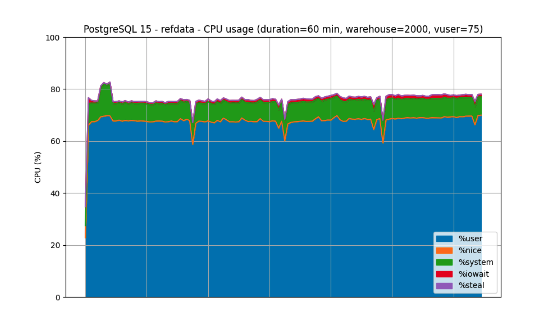

These first charts show total CPU usage measured along several metrics. As we can see, refdata has lower user and system CPU impact compared to heap storage, suggesting that refdata stores and accesses data more efficiently.

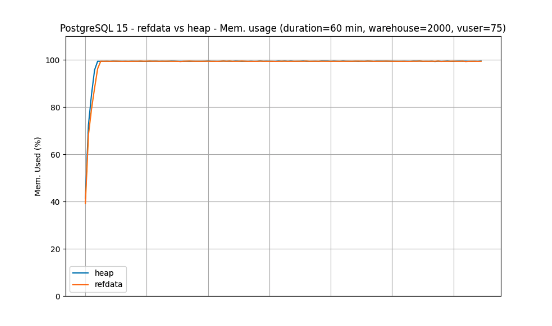

The next chart shows memory usage and indicates there is minimal difference in memory usage between refdata and heap storage. This supports the improved CPU impact conclusions as it shows the transactions were memory-bound, not CPU-bound.

CPU Usage

Memory Usage

IO

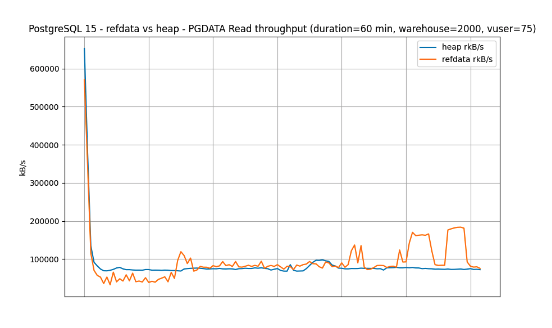

These charts show the IO throughput of refdata versus heap storage. We can see the improved READ throughput of refdata, which supports the conclusion that data can be accessed more efficiently compared to heap storage. This is further supported by the increased NOPM rate in all runs, shown in the table below. On average, refdata has a NOPM rate that is 10.3% better than heap storage.

NOPM

Final Thoughts

This benchmark shows that using refdata TAM for the item table and the relevant foreign keys in the OLTP

TPC-C like workload gives a global 10% performance boost. This boost allows for increased scalability for schemas that use foreign keys to access data. In OLTP use-cases, this increased scalability can offer faster, more reliable performance and can assist in reaching high-availability goals.