Looking to deploy PostgreSQL or EDB Advanced Server on AWS? The purpose of this blog post is to provide general sizing information for PostgreSQL and EDB Postgres Advanced Server (EPAS) when deployed on Amazon Web Services (AWS) as Infrastructure as a Server (IaaS). The “T-shirt” sizing provides core counts, CPU and workload assessments (Transactions per minute (TPM) from HammerDB) for small, medium, large and extra-large classifications. Read on for some basic sizing guidance for PostgreSQL and EPAS on AWS.

Methodology

This workload assessment is based on the TPROC-C benchmark which is an OLTP-centric workload that is driven by an open-source tool, HammerDB. HammerDB was installed and run on a separate server from the database in order to not degrade performance on the database. More information on HammerDB and the TPC-C benchmark can be found on the HammerDB website.

HammerDB was selected over pgbench because HammerDB can be utilized against many databases beyond PostgreSQL and allows for the end user to run the same HammerDB process on the existing legacy database (Oracle, DB2, SQL Server, etc.) to determine the current throughput. One of the objectives of this exercise was to provide complete transparency into the benchmark process, the settings and the results so that another party could provision the same environment and run similar tests if they chose to do so.

The T-shirt sizes along with the respective CPU core count and memory are shown in the chart below.

| T-Shirt Size | Core count | Memory |

|---|---|---|

| Small | 4 | 32 GB |

| Medium | 8 | 64 GB |

| Large | 16 | 128 GB |

| Extra Large | 32 | 256 GB |

When running the TPROC-C benchmarks with HammerDB, the following parameters were utilized:

| Value | Setting |

|---|---|

| EPAS Version | 13.1.4 |

| Operating System | CentOS 8 |

| Database Size | 120 GB |

| Concurrent User Threads | 96 |

| Storage | 500 GB |

| Ramp up Time | 5 minutes |

| Run Duration | 30 minutes |

Additionally, the EPAS database was tuned from the out of the box deployment settings with some minor variations across the T-shirt sizes. Those settings along with the default values for each of the T-shirt sizes can be found in Appendix A at the end of this post.

Although the workload assessments were performed on EPAS in a single node configuration with no replication or clustering, he performance should be consistent with utilizing community PostgreSQL with the same configuration. As a validation point, Vibhor Kumar, Vice President of Performance Engineering at EDB, demonstrated that EPAS provides nominally the same performance as PostgreSQL for the pgbench workload in the blog, Pgbench: Performance Benchmark of PostgreSQL 12 and EDB Advanced Server 12.

T-Shirt Sizing Results

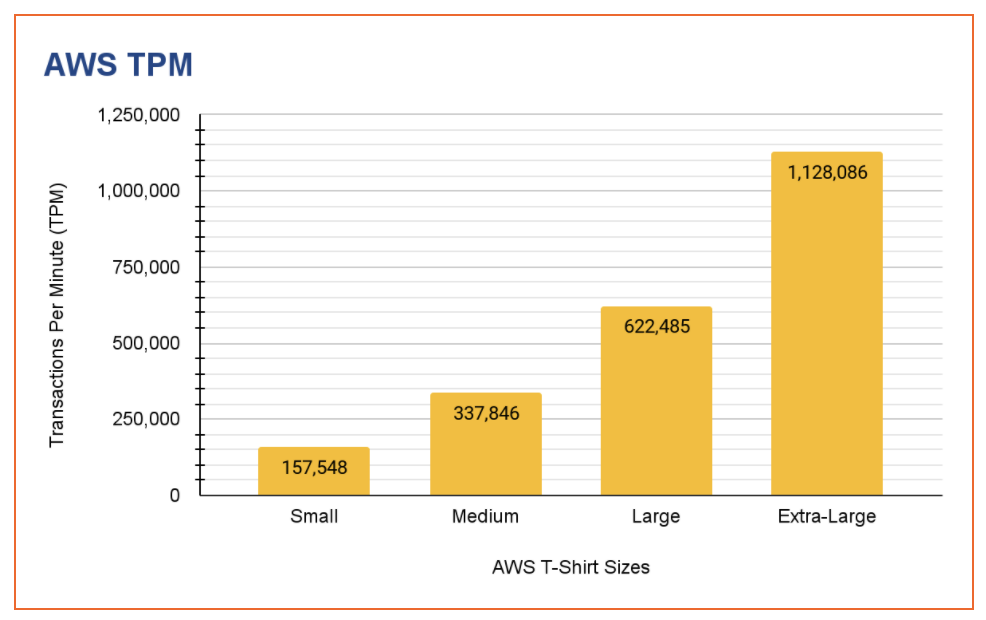

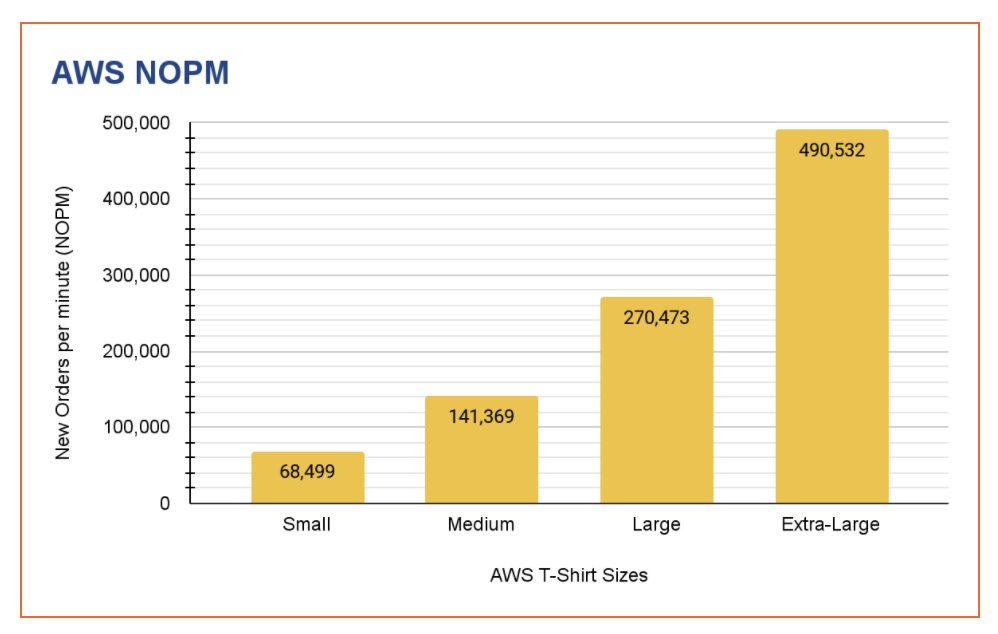

Amazon Elastic Compute Cloud (Amazon EC2) machines were provisioned and utilized for this exercise. The storage that was provisioned was the faster io2 storage which is the latest generation of the Provisioned IOPS SSD volumes from AWS. The AWS provisioned servers have the characteristics and throughput (both Transactions per Minute (TPM) and New Orders per Minute (NOPM)) shown below.

| T-Shirt Size | Provisioned Server | CPU Speed | CPU Cores | RAM | Provisioned IOPS | IOPS Utilized | TPM | NOPM |

|---|---|---|---|---|---|---|---|---|

| Small | r5n.xlarge | 3.1Ghz Intel | 4 | 32 | 10,000 | 2,500 | 157,548 | 68,499 |

| Medium | r5n.2xlarge | 3.1Ghz Intel | 8 | 64 | 10,000 | 3,300 | 337,846 | 141,369 |

| Large | r5n.4xlarge | 3.1Ghz Intel | 16 | 128 | 30,000 | 4,500 | 622,485 | 270,483 |

| Extra-Large | r5n.8xlarge | 3.1Ghz Intel | 32 | 256 | 40,000 | 5,000 | 1,128,086 | 490,532 |

Increasing or decreasing the IOPS on a provisioned server can affect the costs per transaction respectively. Settings should be chosen to provide the best performance for the cost per transaction. At times, an increase in provisioned IOPS can minimally increase the performance but the added costs may not be worth the incremental gains. It was even noted that drastically increasing IOPS could negatively impact the performance.

These results can be reproduced by using the open source tools provided by EDB with the configuration provided in the appendices:

- edb-deployment installed https://github.com/EnterpriseDB/postgres-deployment

- edb-ansible installed from https://github.com/EnterpriseDB/edb-ansible

The following graph provides a summary of the Transactions per Minute (TPM) produced utilizing HammerDB with EPAS for each of the T-shirt sizes deployed on AWS.

The next graph provides a summary of the New Orders per minute (NOPM) produced utilizing HammerDB with EPAS for each of the T-shirt sizes deployed on AWS.

Conclusion

The goal of this blog post is to provide some basic sizing guidance for PostgreSQL and EDB Advanced Server (EPAS) when deployed on Amazon Web Services (AWS). The key word is “guidance” and this is truly a situation where “your mileage may vary” depending on your workload and your deployment of PostgreSQL or EDB Advanced Server. This blog post should be used as a guide to start the planning for cloud deployment and then revised for your specific deployment and utilization. It is expected that you might run HammerDB against your existing system to obtain baseline performance and compare that to the throughput shown for AWS to determine the appropriate starting point or T-shirt size for your PostgreSQL or EPAS deployment.

Appendix A -

PostgreSQL configuration settings

The following settings were utilized to optimize the results for this benchmarking and sizing effort:

| Setting | Default | Small | Medium | Large | Extra Large |

|---|---|---|---|---|---|

| archive_command | (disabled) | /bin/true | /bin/true | /bin/true | /bin/true |

| archive_mode | off | on | on | on | on |

| autovacuum_max_workers | 3 | 5 | 5 | 5 | 5 |

| autovacuum_vacuum_cost_limit | -1 | 3000 | 3000 | 3000 | 3000 |

| checkpoint_completion_target | 0.5 | 0.9 | 0.9 | 0.9 | 0.9 |

| checkpoint_timeout | 300 | 900 | 900 | 900 | 900 |

| cpu_tuple_cost | 0.01 | 0.03 | 0.03 | 0.03 | 0.03 |

| DateStyle | ISO, MDY | Redwood, SHOW_TIME | Redwood, SHOW_TIME | Redwood, SHOW_TIME | Redwood, SHOW_TIME |

| db_dialect | postgres | redwood | redwood | redwood | redwood |

| dbms_alert.max_alerts | 100 | 100 | 100 | 100 | |

| dbms_aq.batch_size | 10 | 10 | 10 | 10 | |

| dbms_aq.launch_delay | 1000 | 1000 | 1000 | 1000 | |

| dbms_aq.max_databases | 1024 | 1024 | 1024 | 1024 | |

| dbms_aq.max_idle_time | 30000 | 30000 | 30000 | 30000 | |

| dbms_aq.max_pending_retries | 1024 | 1024 | 1024 | 1024 | |

| dbms_aq.max_workers | 4 | 4 | 4 | 4 | |

| dbms_aq.min_work_time | 10000 | 10000 | 10000 | 10000 | |

| dbms_pipe.total_message_buffer | 30 | 30 | 30 | 30 | |

| default_text_search_config | pg_catalog.simple | pg_catalog.english | pg_catalog.english | pg_catalog.english | pg_catalog.english |

| edb_redwood_date | off | on | on | on | on |

| edb_redwood_greatest_least | off | on | on | on | on |

| edb_redwood_strings | off | on | on | on | on |

| edb_wait_states.directory | edb_wait_states | edb_wait_states | edb_wait_states | edb_wait_states | |

| edb_wait_states.retention_period | 604800 | 604800 | 604800 | 604800 | |

| edb_wait_states.sampling_interval | 1 | 1 | 1 | 1 | |

| effective_cache_size | Varies based on size | 557056 | 4841216 | 4841216 | 4841216 |

| idle_in_transaction_session_timeout | 0 | 600000 | 600000 | 600000 | 600000 |

| index_advisor.enabled | on | on | on | on | |

| index_advisor.max_candidates | 16384 | 16384 | 16384 | 16384 | |

| lc_messages | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 | |

| lc_monetary | C | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 |

| lc_numeric | C | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 |

| lc_time | C | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 | en_US.UTF-8 |

| listen_addresses | localhost | * | * | * | * |

| log_autovacuum_min_duration | -1 | 0 | 0 | 0 | 0 |

| log_directory | log | /var/log/edb | /var/log/edb | /var/log/edb | /var/log/edb |

| log_line_prefix | %m [%p] | %m [%p%l] %u@%d app=%a | %m [%p%l] %u@%d app=%a | %m [%p%l] %u@%d app=%a | %m [%p%l] %u@%d app=%a |

| log_lock_waits | off | on | on | on | on |

| log_min_duration_statement | -1 | 1000 | 1000 | 1000 | 1000 |

| log_temp_files | -1 | 0 | 0 | 0 | 0 |

| log_timezone | GMT | UTC | UTC | UTC | UTC |

| logging_collector | off | on | on | on | on |

| maintenance_work_mem | 1048576 | 966656 | 1048576 | 1048576 | 1048576 |

| max_connections | 100 | 300 | 300 | 300 | 300 |

| max_wal_size | 1024 | 102400 | 102400 | 102400 | 102400 |

| password_encryption | md5 | scram-sha-256 | scram-sha-256 | scram-sha-256 | scram-sha-256 |

| pg_stat_statements.max | 5000 | 5000 | 5000 | 5000 | |

| pg_stat_statements.save | on | on | on | on | |

| pg_stat_statements.track | top | top | top | top | |

| pg_stat_statements.track_utility | on | on | on | on | |

| primary_slot_name | primary1 | primary1 | primary1 | primary1 | |

| random_page_cost | 4 | 1.1 | 1.1 | 1.1 | 1.1 |

| shared_buffers | 1048576 | 3145728 | 3145728 | 3145728 | 3145728 |

| shared_preload_libraries | $libdir/dbms_pipe, $libdir/edb_gen, $libdir/dbms_aq, $libdir/edb_wait_states, $libdir/sql-profiler, $libdir/index_advisor, $libdir/pg_stat_statements | $libdir/dbms_pipe, $libdir/edb_gen, $libdir/dbms_aq, $libdir/edb_wait_states, $libdir/sql-profiler, $libdir/index_advisor, $libdir/pg_stat_statements | $libdir/dbms_pipe, $libdir/edb_gen, $libdir/dbms_aq, $libdir/edb_wait_states, $libdir/sql-profiler, $libdir/index_advisor, $libdir/pg_stat_statements | $libdir/dbms_pipe, $libdir/edb_gen, $libdir/dbms_aq, $libdir/edb_wait_states, $libdir/sql-profiler, $libdir/index_advisor, $libdir/pg_stat_statements | |

| sql_profiler.enabled | on | on | on | on | |

| sql_profiler.explain_format | text | text | text | text | |

| sql_profiler.use_array_for_paramas | off | off | off | off | |

| ssl | off | on | on | on | on |

| ssl_ca_file | /pgdata/pg_data/root.crt | /pgdata/pg_data/root.crt | /pgdata/pg_data/root.crt | /pgdata/pg_data/root.crt | |

| ssl_cert_file | server.crt | /pgdata/pg_data/server.crt | /pgdata/pg_data/server.crt | /pgdata/pg_data/server.crt | /pgdata/pg_data/server.crt |

| ssl_crl_file | /pgdata/pg_data/root.crl | /pgdata/pg_data/root.crl | /pgdata/pg_data/root.crl | /pgdata/pg_data/root.crl | |

| ssl_key_file | server.key | /pgdata/pg_data/server.key | /pgdata/pg_data/server.key | /pgdata/pg_data/server.key | /pgdata/pg_data/server.key |

| timed_statistics | on | off | off | off | off |

| TimeZone | GMT | UTC | UTC | UTC | UTC |

| unix_socket_directories | /tmp | /var/run/edb | /var/run/edb | /var/run/edb | /var/run/edb |

| wal_buffers | 2048 | 2048 | 8192 | 8192 | 8192 |

| wal_compression | off | on | on | on | on |

| wal_keep_segments | 0 | 10 | 10 | 10 | 10 |

| wal_log_hints | off | on | on | on | on |

| work_mem | 65536 | 26624 | 449536 | 449536 | 449536 |

Appendix B -

edb-deployment JSON Settings for EDB Postgres Advanced Server and Hammerdb Server on AWS

| SMALL CONFIGURATION | MEDIUM CONFIGURATION | LARGE CONFIGURATION | XL CONFIGURATION |

|---|---|---|---|

| "{ ""postgres_server"": { ""instance_type"": ""r5n.xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 50, ""iops"": 2500 }, ""additional_volumes"": { ""count"": 2, ""type"": ""io2"", ""size"": 500, ""iops"": 10000, ""encrypted"": false } }, ""hammerdb_server"": { ""instance_type"": ""m5n.xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 100, ""iops"": 1000 } } }" | "{ ""postgres_server"": { ""instance_type"": ""r5n.2xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 50, ""iops"": 250 }, ""additional_volumes"": { ""count"": 2, ""type"": ""io2"", ""size"": 500, ""iops"": 10000, ""encrypted"": false } }, ""hammerdb_server"": { ""instance_type"": ""m5n.xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 100, ""iops"": 1000 } } }" | "{ ""postgres_server"": { ""instance_type"": ""r5n.4xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 50, ""iops"": 250 }, ""additional_volumes"": { ""count"": 2, ""type"": ""io2"", ""size"": 500, ""iops"": 10000, ""encrypted"": false } }, ""hammerdb_server"": { ""instance_type"": ""m5n.xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 100, ""iops"": 1000 } } }" | "{ ""postgres_server"": { ""instance_type"": ""r5n.8xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 50, ""iops"": 250 }, ""additional_volumes"": { ""count"": 2, ""type"": ""io2"", ""size"": 500, ""iops"": 30000, ""encrypted"": false } }, ""hammerdb_server"": { ""instance_type"": ""m5n.xlarge"", ""volume"": { ""type"": ""gp2"", ""size"": 100, ""iops"": 1000 } } }" |