Can I run PostgreSQL in Kubernetes and expect storage performance comparable to traditional installations on bare metal or VMs? In this article I go through the benchmarks we ran in our own Private Cloud, based on Kubernetes 1.17, to test the performance of local persistent volumes using OpenEBS Local PV.

I have been working with databases for more than 20 years now, most of them with PostgreSQL and, professionally, with 2ndQuadrant as an early member. I was head of Global Support for a few years, and for the past few months I have been leading the Cloud Native initiative at 2ndQuadrant, with the goal of bringing our services and products into the Kubernetes sphere.

We are the first PostgreSQL company to become a Kubernetes Certified Service Provider. We recently released our first operator for BDR so that our customers can now integrate their Cloud Native applications with a multi-master database having self-healing properties and high availability. And this is just our first mark: small but important, I would say.

As you know, storage is a critical component in database scenarios. For some time, my main concern about running databases in Kubernetes has been the almost inevitable use of network storage in a pod that hosts, for example, a PostgreSQL instance. In particular, I am concerned about the impact that network storage latency has on performance.

What we have been doing as a team at 2ndQuadrant is exploring the possibility of using Local Persistent Volumes. What is a Local Persistent Volume? It is storage that is mounted directly on the Kubernetes node where the pod is running.
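To make the definition concrete, here is a minimal sketch of a statically provisioned local volume using the upstream Kubernetes API. It is only an illustration, not the configuration used in our benchmarks (which rely on OpenEBS Local PV): the object names, the capacity, the mount path and the node name are all hypothetical.

```yaml
# Illustrative only: names, sizes, paths and node names are assumptions.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage                 # hypothetical class name
provisioner: kubernetes.io/no-provisioner
# Delay binding until a pod is scheduled, so the volume and the pod
# end up on the same node.
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pgdata-worker-1               # hypothetical volume name
spec:
  capacity:
    storage: 100Gi                    # hypothetical size
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/disks/pgdata           # hypothetical mount point on the node
  # A local volume is tied to one node, so node affinity is mandatory.
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - worker-1            # hypothetical node name
```

The `nodeAffinity` section is what distinguishes this from network storage: a pod claiming the volume can only be scheduled on the node that physically hosts the disk.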

While an anti-pattern for many Kubernetes workloads (or even heresy for purists), it is our belief that this use case will increase the adoption of a robust and versatile database like PostgreSQL in Private Cloud contexts, as well as in Public ones – even though in managed contexts network storage is still the norm.

In any case, one thing that I have learned at 2ndQuadrant is that words must always be corroborated by facts. What better judge than real benchmarking and measurements?

We decided to perform these benchmarks directly in our Private Cloud production environment, made up of 3 physical machines for the Kubernetes masters and 4 physical machines for the Kubernetes worker nodes. All our machines come with two 10Gb ethernet interfaces bonded in the same private network through two switches, for maximum throughput and bandwidth, as well as fault tolerance and load balancing. All servers run Ubuntu Linux 18.04 LTS.

As part of our hardware validation process, we benchmark direct storage performance to determine the maximum theoretical limits. This can only be done before a server is deployed in production. These measurements are fundamental in daily operations to determine whether a system (like a database) is performing as expected in terms of sequential reads, sequential writes, random writes, and so on.

In particular, our goal was to determine whether Kubernetes and containerised applications pay a performance penalty over pure bare metal applications, in terms of sequential reads and sequential writes (very important for a database system like PostgreSQL).

We will focus only on the results observed on the physical machines that are used as Kubernetes workers (those where our PostgreSQL databases will eventually run with local storage). Each worker node has the following specifications:

- 8 cores / 16 threads (1 CPU “Intel® Xeon® W-2145 Octa-Core Skylake W”)

- 128GB of RAM

- Operating system volume: 2x 240 GB SSD, HW RAID 1 (with BBU write cache)

- Applications data volume: 2x 3.84 TB SSD, HW RAID 1 (with BBU write cache)

All servers are equipped with Toshiba (Kioxia) KHK61RSE3T84 disks in an Adaptec ASR8405 hardware RAID controller.

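We used bonnie++ to test direct storage performance of the second volume, the one to be used for application data. Across multiple tests, we scored an average of 1000 MB/s for sequential reads and 500 MB/s for sequential writes. These results are consistent with the per-disk performance declared by the manufacturer (Kioxia): up to 550 MB/s for sequential reads and up to 530 MB/s for sequential writes. In a two-disk RAID 1 mirror, reads can be served by both disks in parallel (hence roughly twice the single-disk figure), while every write must go to both disks (hence roughly the single-disk figure).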

We then moved forward and repeated the same tests inside Kubernetes, using Kubestone, a benchmarking operator that can be used to evaluate the performance of an installed Kubernetes cluster. We adopted OpenEBS as a storage solution and used their Local PV based on hostpath.
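For readers unfamiliar with OpenEBS, the following is a sketch of what a hostpath-based Local PV storage class typically looks like, based on the OpenEBS documentation. The class name `openebs-hostpath` is the one referenced by our benchmarking jobs; the `BasePath` value shown is the documented default and is an assumption here, not necessarily the directory used in our cluster.

```yaml
# Sketch of an OpenEBS Local PV (hostpath) storage class.
# BasePath is the documented default, assumed here for illustration.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-hostpath
  annotations:
    openebs.io/cas-type: local
    cas.openebs.io/config: |
      - name: StorageType
        value: "hostpath"
      - name: BasePath
        value: "/var/openebs/local"
provisioner: openebs.io/local
# Volumes are created on the node where the consuming pod is scheduled.
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
```

With a class like this, each claim is backed by a directory under `BasePath` on the selected node, so I/O goes straight to the node's local disks rather than over the network.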

We performed several tests using fio, a popular open source tool for storage benchmarking, on one selected worker node. We observed remarkable variability in the results depending on the I/O parameters we used.

Changing settings like ioengine, iodepth and block size, we were able to reach:

- 964 MB/s in sequential reads (-3.6% from bare metal)

- 496 MB/s in sequential writes (-0.8% from bare metal)

It is worth mentioning that we are using just 2 physical disks in this RAID 1 setup: imagine the potential of using more powerful servers and storage, including more disks or even dedicated DAS or SANs for maximum performance.

We then set up an OpenEBS cStor Pool on the other 3 Kubernetes worker nodes to compare a Local Persistent Volume with a shared Persistent Volume. In the cStor case too we encountered a lot of variability in the results and, by tuning the different knobs for ioengine, iodepth and block size, we were able to reach:

- 54 MB/s in sequential reads (-94.6% from bare metal)

- 54 MB/s in sequential writes (-89.2% from bare metal)

The following is an example of a Kubestone benchmarking job that we have used in the process:

    apiVersion: perf.kubestone.xridge.io/v1alpha1
    kind: Fio
    metadata:
      name: fio-seq-write-libaio-depth16
      namespace: kubestone
    spec:
      cmdLineArgs: --name=seqwrites --rw=write --direct=1 --size=10G --bs=8K --ioengine=libaio --iodepth=16
      image:
        name: xridge/fio:3.13-r1
      volume:
        persistentVolumeClaimSpec:
          storageClassName: openebs-hostpath
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 50Gi
        volumeSource:
          persistentVolumeClaim:
            claimName: GENERATED
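A sequential read variant can be derived from the same job by changing the fio workload type. The sketch below is an assumption built from the job above: the job name and the `--name` label are hypothetical, and the block size, I/O engine and queue depth are simply carried over rather than being the specific values behind the best read results.

```yaml
# Hypothetical sequential-read counterpart of the job above.
apiVersion: perf.kubestone.xridge.io/v1alpha1
kind: Fio
metadata:
  name: fio-seq-read-libaio-depth16   # hypothetical job name
  namespace: kubestone
spec:
  # --rw=read selects sequential reads; --direct=1 bypasses the page cache.
  cmdLineArgs: --name=seqreads --rw=read --direct=1 --size=10G --bs=8K --ioengine=libaio --iodepth=16
  image:
    name: xridge/fio:3.13-r1
  volume:
    persistentVolumeClaimSpec:
      storageClassName: openebs-hostpath
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 50Gi
    volumeSource:
      persistentVolumeClaim:
        claimName: GENERATED
```

Pointing `storageClassName` at a different class is enough to repeat the same test against another storage backend.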

A summary of the observed results is available in the following table:

| Workload | Disks (as declared by the manufacturer) | Bare metal | OpenEBS Local Persistent Volume | OpenEBS cStor Pool volume |
|---|---|---|---|---|
| Sequential reads | Up to 550 MB/s | 1000 MB/s | 964 MB/s | 54 MB/s |
| Sequential writes | Up to 530 MB/s | 500 MB/s | 496 MB/s | 54 MB/s |
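For reference, the percentage differences from bare metal quoted earlier can be reproduced from the figures in the table as the relative change between each measured throughput and the bare metal one:

$$
\begin{aligned}
\Delta &= \frac{\text{measured} - \text{bare metal}}{\text{bare metal}} \times 100\% \\
\Delta_{\text{Local PV, reads}} &= \frac{964 - 1000}{1000} \times 100\% = -3.6\% \\
\Delta_{\text{Local PV, writes}} &= \frac{496 - 500}{500} \times 100\% = -0.8\% \\
\Delta_{\text{cStor, reads}} &= \frac{54 - 1000}{1000} \times 100\% = -94.6\% \\
\Delta_{\text{cStor, writes}} &= \frac{54 - 500}{500} \times 100\% = -89.2\%
\end{aligned}
$$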

Our efforts do not end here, though. Our objective is to continue our investigation on the cStor side and exclude or reduce the risk of configuration or tuning issues (we are happy to receive comments and feedback from readers). In any case, the results we obtained on Local Persistent Volumes are more than encouraging, and fantastic news for our ultimate goal: to break the barrier for high-performing PostgreSQL database usage in Kubernetes.

As Local Persistent Volumes only became generally available in March 2019 with Kubernetes 1.14, we expect and hope to see lots of improvements in this area. We are planning to benchmark other storage solutions in the future, including Portworx and topolvm.

We are also planning to perform further tests on disks that are encrypted at rest with LUKS, an important requirement for GDPR compliance. An initial run of tests has shown some penalty in both reads and writes, as expected, probably due to the CPU acting as a bottleneck.

In the next few weeks we will publish some benchmarks about our Cloud Native PostgreSQL Operator and Cloud Native BDR Operator on the same Local Persistent Volumes described in this article.

What is important to note is that Kubernetes empowers organisations around the world to devise and implement their own Private Cloud infrastructure, retaining full control over their data and processes while benefiting from the velocity enhancements that both Cloud Native and DevOps offer today.

At 2ndQuadrant we believe that PostgreSQL is truly the most amazing database in the world. It has been like that on bare metal and virtual machine environments.

Our legacy is to contribute to making it the best for Cloud Native workloads too.

(I would like to thank all my fantastic teammates, and in particular Francesco Canovai and Jonathan Gonzalez for designing and running the benchmarks)


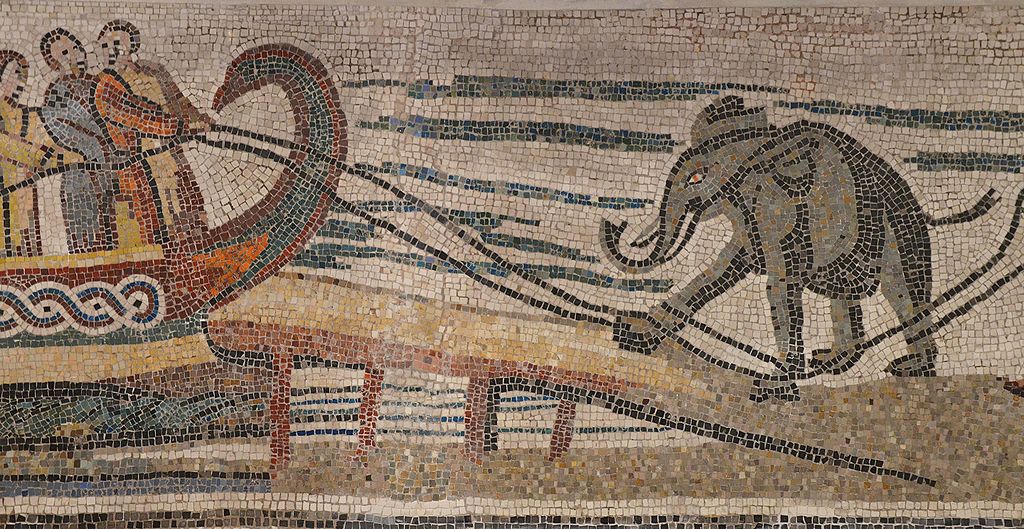

Picture by Carole Raddato from FRANKFURT, Germany / CC BY-SA