Artificial intelligence (AI) is transforming the world, changing how information is captured, processed, stored, analyzed, and used. Understanding the terminology, the different types of AI, and how the technology works is critical for applying its capabilities to business objectives. In this first post of our new blog series, we’ll define the terminology, outline the AI landscape, and highlight how a Postgres data and AI platform can unlock the potential of this revolutionary technology.

We’ll start by defining artificial intelligence. Artificial intelligence generally refers to the simulation of human intelligence processes by machines, especially computer systems. AI systems are designed to perform tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, and language translation.

Without data, there is no AI. That’s why, when we talk about the core of AI, we’re often talking about databases and lakehouses. Achieving enterprise-level results for AI projects calls for storing your data in an enterprise data management environment such as Postgres, which provides a solid foundation for AI workloads.

In this video, EDB Chief Architect for Analytics and AI Torsten Steinbach outlines the data landscape.

What are the different types of AI?

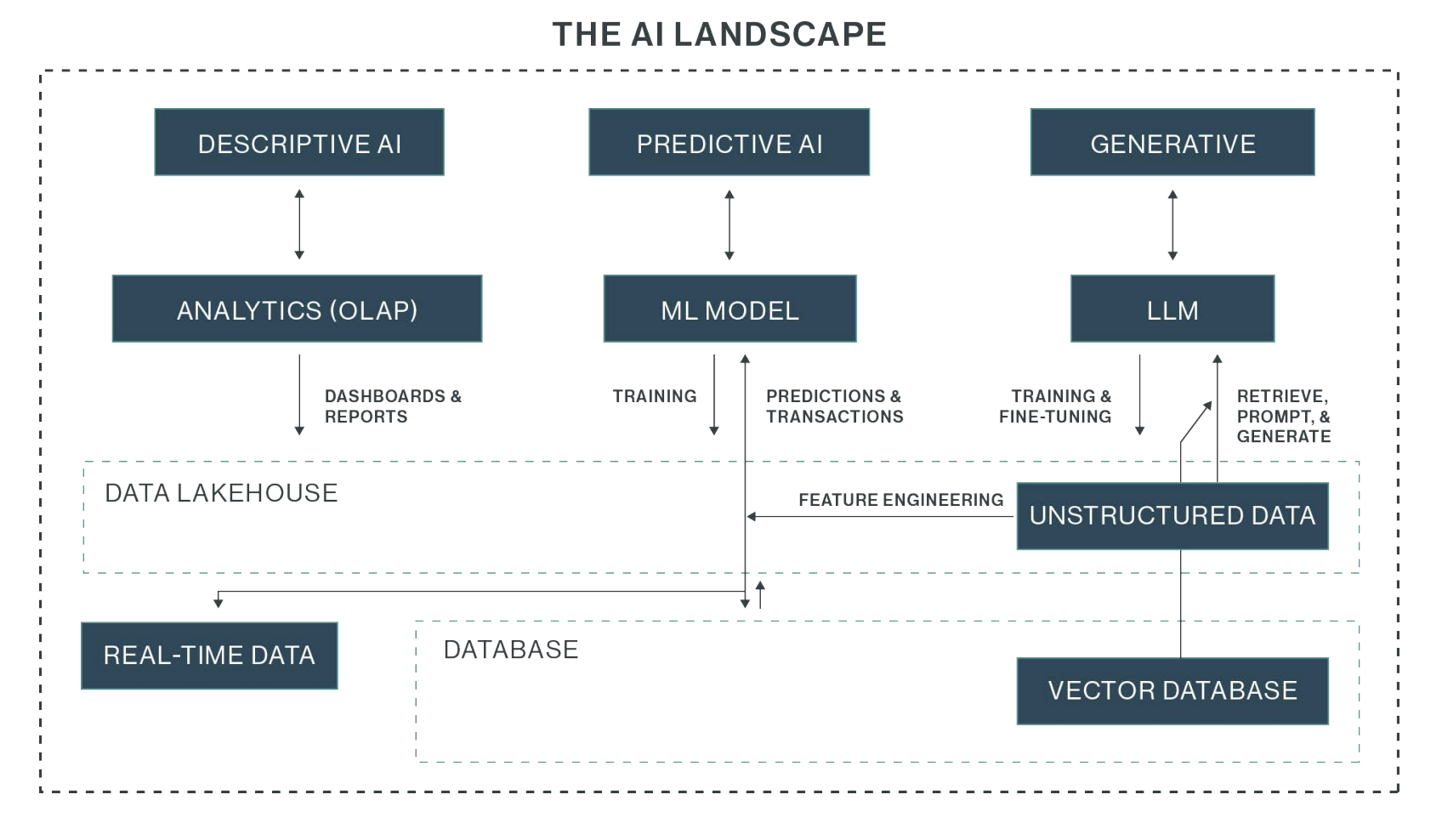

AI systems can be categorized into three main types based on their capabilities: descriptive AI, predictive AI, and generative AI.

Descriptive AI, often referred to as business intelligence (BI), uses analytic technologies to run queries and perform online analytical processing (OLAP) on data to generate dashboards, reports, and more.
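To make the OLAP idea concrete, here is a minimal sketch of the kind of aggregate query behind a BI dashboard tile. It assumes a hypothetical `sales` table and sample rows, and it uses Python's built-in `sqlite3` module as an in-memory stand-in for a Postgres connection so the example runs on its own; the `GROUP BY` query itself is standard SQL that Postgres also accepts.

```python
import sqlite3

# Hypothetical sample data: (region, product, amount)
SALES = [
    ("EMEA", "widgets", 120.0),
    ("EMEA", "gadgets", 80.0),
    ("APAC", "widgets", 200.0),
    ("AMER", "gadgets", 50.0),
    ("AMER", "widgets", 75.0),
]


def revenue_by_region(rows):
    """Return [(region, total_amount, order_count)] sorted by region."""
    conn = sqlite3.connect(":memory:")  # stand-in for a Postgres connection
    try:
        conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
        # A typical BI/OLAP-style rollup: aggregate a measure along one dimension.
        query = """
            SELECT region, SUM(amount) AS total, COUNT(*) AS orders
            FROM sales
            GROUP BY region
            ORDER BY region
        """
        return conn.execute(query).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for region, total, orders in revenue_by_region(SALES):
        print(f"{region}: {total:.2f} across {orders} orders")
```

A real dashboard would run queries like this against the production database or a lakehouse and render the result set as charts or tables.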

Predictive AI involves making predictions using machine learning (ML) models. To create these models, historical data, often drawn from a data lakehouse, is used for training. Once trained, the ML models make predictions in real time as new data arrives, whether that’s a credit card transaction that needs to be checked for potential fraud or an incoming data stream that requires immediate analysis.
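The train-then-predict pattern can be sketched as follows. This is an illustration under simplifying assumptions, not a production fraud model: a basic z-score rule (how many standard deviations a new amount sits from the historical mean) stands in for a trained ML model, and the `train` and `flag_stream` names, the 3.0 threshold, and the sample amounts are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class AmountModel:
    """A deliberately simple stand-in for an ML model: mean and spread of past amounts."""
    mu: float
    sigma: float

    def score(self, amount: float) -> float:
        # How many standard deviations the new amount sits from the historical mean.
        return abs(amount - self.mu) / self.sigma

    def is_suspicious(self, amount: float, threshold: float = 3.0) -> bool:
        return self.score(amount) > threshold


def train(history: list[float]) -> AmountModel:
    """'Training' step: fit the model on historical transaction amounts."""
    if len(history) < 2:
        raise ValueError("need at least two historical amounts")
    sigma = stdev(history)
    if sigma == 0:
        raise ValueError("historical amounts must vary")
    return AmountModel(mu=mean(history), sigma=sigma)


def flag_stream(model: AmountModel, incoming: list[float]) -> list[float]:
    """'Inference' step: score each new amount as it arrives and keep the outliers."""
    return [amount for amount in incoming if model.is_suspicious(amount)]


# Hypothetical historical card transaction amounts for one customer.
HISTORY = [20.0, 25.0, 22.0, 30.0, 18.0, 27.0, 24.0, 21.0]

if __name__ == "__main__":
    model = train(HISTORY)
    print(flag_stream(model, [26.0, 500.0, 19.5]))
```

The split mirrors the paragraph above: training happens once on historical data, while scoring is cheap enough to run on every new transaction.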

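Generative AI also involves models, specifically large language models (LLMs) or other foundation models trained on large volumes of data. Rather than only analyzing existing data, these models generate new content, such as text, images, or code, in response to a prompt.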

While these forms of AI are different, all three come together at the database layer, where structured and unstructured data converge. Databases like EDB Postgres are the foundation of AI: they store that data and make it available quickly, so AI can do what it does best.

Structured data vs. unstructured data

Structured and unstructured data are both leveraged in AI processes, and both contain valuable insights that organizations want to unlock. However, each type needs to be handled differently.

Structured data can be easily stored, accessed, filtered, and analyzed. As its name indicates, this data follows a defined structure, such as rows and columns in a table. Structured data can be stored in a database or in files using formats such as CSV and JSON. It can also be found in a data lake and in real-time feeds such as Kafka messages, for example telemetry messages sent from IoT devices to a central system.
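As a small illustration of structured data arriving in different formats, the sketch below normalizes a CSV export and a JSON message into one record type that could then be loaded into a table. The `Reading` type, its field names, and the assumption that each telemetry message carries a single JSON object are hypothetical choices for this example; a real Kafka consumer would receive these payloads through a client library.

```python
import csv
import io
import json
from typing import NamedTuple


class Reading(NamedTuple):
    """One structured row: every source is normalized to these two columns."""
    device_id: str
    temperature_c: float


def from_csv(text: str) -> list[Reading]:
    """Parse a CSV export with a header row into Reading records."""
    reader = csv.DictReader(io.StringIO(text))
    return [Reading(row["device_id"], float(row["temperature_c"])) for row in reader]


def from_json_messages(messages: list[str]) -> list[Reading]:
    """Parse telemetry messages, assuming each one is a single JSON object."""
    readings = []
    for raw in messages:
        payload = json.loads(raw)
        readings.append(Reading(payload["device_id"], float(payload["temperature_c"])))
    return readings


# Hypothetical sample inputs.
CSV_EXPORT = "device_id,temperature_c\nsensor-1,21.5\nsensor-2,19.0\n"
TELEMETRY = ['{"device_id": "sensor-3", "temperature_c": 22.25}']

if __name__ == "__main__":
    for reading in from_csv(CSV_EXPORT) + from_json_messages(TELEMETRY):
        print(reading)
```

Because both sources map to the same columns, downstream queries do not need to know which format a row originally came from.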

Unstructured data accounts for a much larger volume. According to multiple analyst estimates, up to 90% of the data generated today is unstructured. This data includes PDFs, text files, Word documents, images, photos, web pages and other HTML content, video such as Zoom recordings, messages, meeting transcripts, social media posts, and other content that’s being posted around the clock.

Unlocking insights from unstructured data

Organizations use descriptive and predictive AI and analytics to unlock insights from structured data. But unstructured data such as text, images, and audio can’t be processed directly by traditional analytics methods.

This is where generative AI plays a crucial role. Generative AI uses technology such as LLMs to work directly with unstructured data, both to train models and to generate data, images, text, and more. Generative AI also enables organizations to extract structured features and insights from unstructured data, a form of feature engineering.

By using LLMs, generative AI can identify sentiment, detect profanity, and extract other structured information from unstructured sources such as documents or social media posts. Turning unstructured data into structured features lets descriptive and predictive AI and analytics accomplish more and operate on a wider range of data sources.
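The feature-extraction step can be sketched as a function that turns free text into typed columns. In practice, `classify` would send a prompt to an LLM and parse its reply; here a keyword-matching stub (`stub_llm_classify`, hypothetical, and far cruder than an LLM) stands in so the example runs offline. The point is the shape of the output: structured fields that descriptive and predictive analytics can then aggregate or feed into models.

```python
import re
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass
class PostFeatures:
    text: str
    sentiment: str  # "positive", "negative", or "neutral"
    contains_profanity: bool


# Hypothetical keyword lists used only by the offline stub below.
POSITIVE = {"love", "great", "fast"}
NEGATIVE = {"hate", "slow", "broken"}
PROFANITY = {"darn"}  # placeholder list for the sketch


def stub_llm_classify(text: str) -> dict:
    """Hypothetical stand-in for an LLM call that returns structured labels.

    A real implementation would prompt a model (for example, "Return the sentiment
    and whether this text contains profanity as JSON") and parse its answer.
    """
    words = set(re.findall(r"[a-z']+", text.lower()))
    balance = len(words & POSITIVE) - len(words & NEGATIVE)
    sentiment = "positive" if balance > 0 else "negative" if balance < 0 else "neutral"
    return {"sentiment": sentiment, "contains_profanity": bool(words & PROFANITY)}


def extract_features(
    texts: list[str], classify: Callable[[str], dict] = stub_llm_classify
) -> list[PostFeatures]:
    """Turn unstructured text into rows with typed, queryable columns."""
    rows = []
    for text in texts:
        labels = classify(text)
        rows.append(PostFeatures(text, labels["sentiment"], labels["contains_profanity"]))
    return rows


# Hypothetical social media posts.
POSTS = [
    "I love how fast the new release is!",
    "The app is broken and darn slow.",
    "Shipping update posted today.",
]

if __name__ == "__main__":
    features = extract_features(POSTS)
    # Once the features are structured, ordinary analytics applies, e.g. a count by sentiment.
    print(Counter(f.sentiment for f in features))
```

Passing `classify` as a parameter keeps the extraction pipeline independent of whichever model produces the labels.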

Ultimately, to unlock the insights and potential of your entire data landscape, including unstructured data, you need an integrated approach that combines generative AI, descriptive analytics, and predictive modeling. By integrating these three AI disciplines, you can realize the value within your diverse data assets, regardless of their structure or format.

Revolutionizing real-time analytics and AI workloads with Postgres

The path to seamless real-time analytics is fraught with hurdles, primarily because data is fragmented across various systems and platforms. Successful real-time analytics and AI workloads need robust, scalable, and versatile database solutions, and that’s why Postgres is emerging as a database of choice.

Postgres is known for its reliability and for a rich feature set that caters to complex analytical needs, including advanced indexing, partitioning, and query optimization techniques, as well as data integration options such as Kafka, foreign data wrappers (FDWs), logical replication, and more. Together, these capabilities provide a solid foundation for building real-time analytical platforms.

By combining Postgres’ advanced analytical features with pgvector’s vector data capabilities, organizations can store vector embeddings alongside their other data and run similarity searches directly within the database. This brings machine learning workloads closer to the data, simplifies storing and querying it, overcomes the limitations posed by data silos, and enables a unified, data-driven approach to decision-making.
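To show what a vector similarity query does, the sketch below ranks documents by cosine distance to a query embedding in plain Python. pgvector's `<=>` operator computes cosine distance, so this ranking mirrors a query of the form shown in the comment. The table name, document ids, and the tiny three-dimensional embeddings are hypothetical; real embeddings from a model typically have hundreds or thousands of dimensions.

```python
import math

# Equivalent pgvector query (hypothetical table "docs" with a vector column "embedding"):
#   SELECT id FROM docs ORDER BY embedding <=> '[1.0, 0.2, 0.0]' LIMIT 2;


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity, the measure pgvector's <=> operator returns."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors in this sketch")
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query: list[float], rows: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the ids of the k rows whose embeddings are closest to the query."""
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [row_id for row_id, _ in ranked[:k]]


# Hypothetical documents with toy 3-dimensional embeddings.
DOCS = [
    ("invoice-faq", [0.9, 0.1, 0.0]),
    ("refund-policy", [0.8, 0.3, 0.1]),
    ("team-offsite", [0.0, 0.2, 0.9]),
]

if __name__ == "__main__":
    print(nearest([1.0, 0.2, 0.0], DOCS))
```

In Postgres with pgvector, the same ranking happens inside the database, next to the rest of the data, and an approximate index (pgvector supports HNSW and IVFFlat) can speed it up on large tables.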

Postgres’ versatility extends to its language support, making it a strong fit for teams that work with a variety of programming languages, including Python, Rust, and R. This flexibility means that AI models, regardless of the language they are written in, can be integrated and executed within the Postgres ecosystem. Data scientists and AI/ML programmers can quickly build ML and LLM applications using Postgres’ advanced features and functionality, with Postgres as their data store.

The importance of real-time analytics and AI in driving business success cannot be overstated. Backed by continuous innovation and community-driven enhancements, EDB and Postgres stand ready to support organizations on their journey toward a more integrated, intelligent, and responsive data landscape.

Watch the AI Overview video featuring EDB Chief Architect for Analytics and AI, Torsten Steinbach

Read the white paper: Intelligent Data: Unleashing AI with PostgreSQL