PG Phriday: Replication Revue

Database replication is an essential ingredient to successfully building a High Availability Postgres cluster. Believe it or not, that wasn’t something the Postgres community has always had. What phases has Postgres replication passed through over the years, and why does it matter?

This won’t just be an exploration into the origins of Postgres replication types, but an exercise in cluster building with each major iteration. The goal in the end is to project where we’re going based on where we’ve been.

In the beginning, there was WAL shipping

As we implied in the introduction, Postgres hasn’t always had easy access to database replication. Prior to Postgres 9.0 in fact, there was no replication of any kind. While it’s true that trigger-based replication existed before then, we’re going to restrict these discussions to built-in functionality only. We want to examine how well Postgres operates as a cluster on its own, not as a component of something else.

So how did Postgres users have any more than a single database node in those days if not through replication? Postgres writes all changes to the database to its Write Ahead Log (WAL) files prior to making the changes to the backend data files. In the event of a crash, Postgres crash recovery will apply any pending changes from the WAL.

An innovative use of these WAL files would be to simply archive them for reuse, and that’s exactly how it works. It’s the reason for the WAL archival system that we still use today. Simply set these parameters in postgresql.conf, and watch the magic:

wal_level = archive

archive_mode = on

archive_command = 'cp -n %f /path/to/archive/%f'

Replica systems required a few extra parameters in a recovery.conf file so they acted like any previously crashed Postgres node:

restore_command = 'cp /path/to/archive/%f %p'

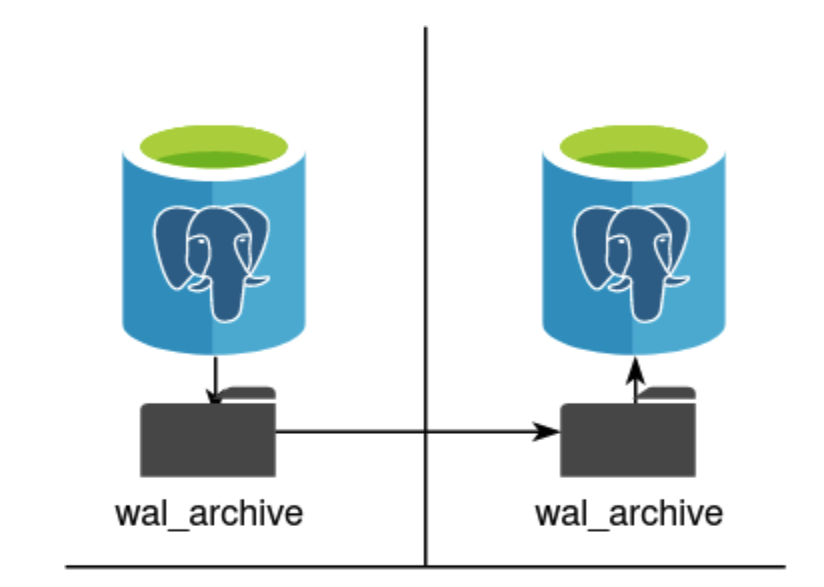

Back then, a two node disaster-recovery cluster would look something like this:

More advanced versions of this might include transmitting to a 3rd-party storage location, directly to the Disaster Recovery Postgres server itself, or other variants. The important part was that the Postgres nodes themselves were never directly connected. Neither node knew the other existed, and the only differentiating factor was how WAL files themselves were managed between dependent servers.

The primary downside for this approach is that WAL files start at 16MB each, and may represent thousands of ongoing and past transaction writes. Marshaling these files properly is never a trivial exercise even for mere backup uses, and having to manage their distribution among multiple replicas is error-prone at best. It is still common for WAL distribution to temporarily stop transmitting files, which amplifies data loss potential in systems that rely on constant file movement.

Even in a best case scenario, one file will always be missing in the case of an upstream Primary failure, with all of the unpleasant associated implications.

Introducing Physical Streaming Replication

Postgres 9.0 brought the advent of Physical Streaming Replication (PSR). It was finally possible to directly link two Postgres nodes such that a replica directly consumed WAL traffic from the upstream Primary. What’s more, the newly introduced Hot Standby mode made it possible to actually execute queries on the replica, which was not possible before.

This still leveraged the continuous crash recovery mechanism, but rather than full 16MB WAL segments, a replica could apply changes as they occurred. The older WAL archival system still has its place for backup purposes, but is no longer necessary to maintain replica nodes. Along with the new functionality came syntax that is probably familiar to some readers.

Primary nodes required these settings:

wal_level = hot_standby

max_wal_senders = 1

Standby systems at the time required these two values in their recovery.conf file, where the recovery system origins become more evident:

standby_mode = 'on'

primary_conninfo = 'host=primary-node …'

And finally to get the most out of this, Standby systems also needed one more addition to their postgresql.conf:

hot_standby = 'on'

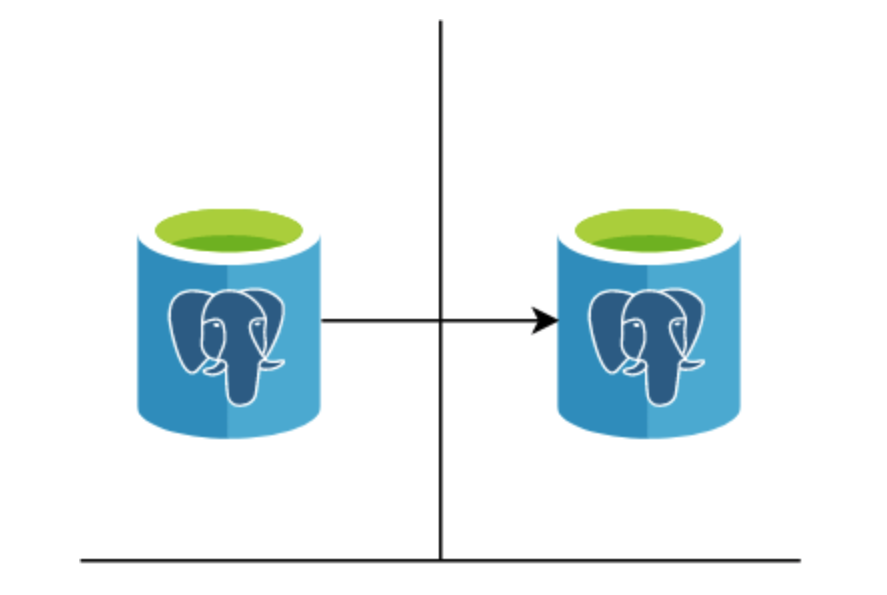

PSR also greatly simplified High Availability stacks of all description, leaving us this more reasonable relationship:

This particular featureset underwent multiple enhancements over the following years, with many important milestones.

Version 9.1

- Synchronous replication! This allowed administrators to specify durability guarantees for committed transactions among designated replicas.

- The pg_stat_replication view on the Primary node, which reflects the downstream status of replica systems. Previously it was necessary to use pg_stat_activity where replicas were mingled with other unrelated client session activity.

Version 9.2

- Cascading replication, allowing replicas to stream from other replicas.

Version 9.4

- Replication slots (pg_replication_slots), making it possible to track replica nodes even while offline, and allowing nodes to catch up following downtime.

Version 9.6

- N-Safe synchronous replication, so multiple replicas could act as synchronous standby systems. This is the closest thing Postgres has to direct quorum support.

In many ways, 9.1 was the first version of Postgres that could be loosely referred to as a “cluster” since replicas were both tracked, and capable of being synchronous with the Primary node. Subsequent releases got us closer and closer to true cluster operation, finally ending with 9.6 where multiple nodes could validate synchronous writes. This isn’t quite a voting quorum agreeing on cluster contents, but it’s the closest thing we’ve got at the moment.

It’s the node tracking, both in pg_stat_replication and pg_replication_slots however, that’s truly interesting at a cluster level. In order to truly have a cluster of nodes, there must be some persistent record of the members and roles of each. Let’s keep this in mind for later.

It’s perfectly Logical, my dear Watson

As the previous section noted, Postgres introduced replication slots in version 9.4. This release is also when Postgres added logical decoding, and this is no coincidence. Logical decoding makes it possible to replicate a subset of tables in a single database rather than the entire Postgres instance, but it also necessitates closer tracking.

Logical decoding is not really “replication” in the strictest sense, but an extract of WAL contents to their data representation equivalent. If we insert a “(5, 5)” tuple into a table named “foo” in some arbitrary transaction number, that’s the actual information transmitted over the replication channel.

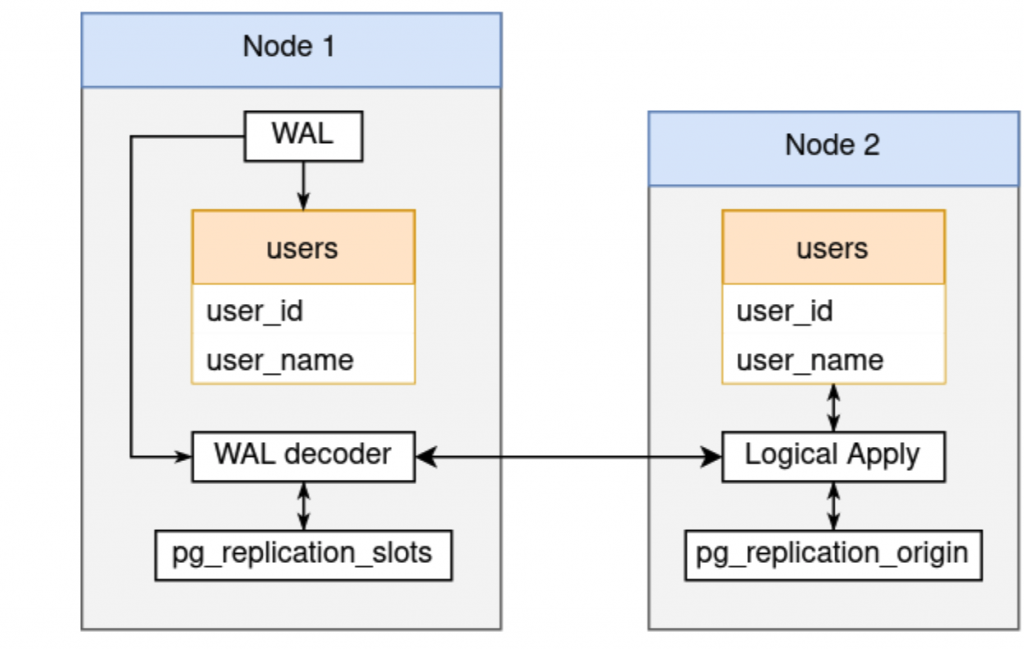

This is the real reason for the pg_replication_slots catalog table; it allows WAL decoding to continue processing from wherever it stopped. That this is also convenient for streaming replication is almost incidental. It’s also only half of the whole picture necessary to build an operational logical replication relationship.

The subscribing node must track that it has already processed a specific transaction from a particular origin server, and must do so for every upstream channel it consumes. Postgres 9.5 brought this exact capability with the pg_replication_origin and pg_replication_origin_status catalog tables. But note that Postgres still didn’t have native logical replication support until version 10. We can see the direction it was going by 9.5, but it took a couple more release iterations to reach the intended result.

Regardless, the combination of upstream and downstream tracking ends up looking something like this:

The WAL Decoder and Logical Apply workers both track their progress in a local catalog table, allowing each node to track the long-term presence and status of the other. To an extent, anyway. The Provider only really knows whether or not the replication slot is being consumed, and subscribers only understand that they’ve applied a certain amount of data from an origin with a particular name.

Still, it’s this enhanced tracking framework that makes this relevant to a discussion on High Availability. Logical replication got us to this point, but it’s possible to go further.

Bringing it All Together

So where do we go from here? In many ways, it seems like all of the requisite parts are there, or are close enough that subsequent steps emerge naturally. The primary downfall of the current physical and logical tracking structures in the current version of Postgres, is that there’s no cohesive whole.

If we check pg_stat_replication, we only have immediate visibility of replicas currently streaming from that node. We can further track previously connected nodes provided they were using replication slots, but only their last known WAL decode and apply positions, and also without the server name or alias or even the application_name field of the subscriber.

Even following Postgres 10’s official addition of native logical replication, publisher nodes do not track subscribers. Only subscriber nodes know which publishers they’re subscribed to through the pg_subscription catalog. To understand the cohesive state of the cluster, we must obtain this information from every single node and stitch it together based on the node and connection status in each direction for every relationship, leveraging a plethora of loosely-coupled system catalogs.

It’s the only way to know that replication slot A belongs to server B, which is currently disconnected due to maintenance, but will return shortly. It’s the only way to see cascaded relationships at all, in fact. It’s clear that the origins of Postgres replication as a crash recovery mechanism still predominate. It would take an extremely sophisticated tool to represent, reflect, or manipulate a full Postgres cluster, so it’s rare for users to attempt.

What do we need to take the next step?

- A central registry of some kind where every node records itself.

- A catalog of all subscription relationships, including replication type.

- Connectivity, status, positions, and related metadata for the above.

This would allow any node to see how many subscribers it had, how many origins it was consuming, whether there were any nodes several hops away that may depend on its data, and so on. This would also greatly simplify monitoring, as generating a directed graph and associated lag heat map is possible with one or two queries on a single node rather than every node in the cluster. Eventually an extension or even Postgres itself could expose libraries or APIs to see the cluster “at a glance”.

It’s a short hop from viewing the cluster to manipulating it. For a High Availability system to function properly, it must know the full state of the entire node constellation at all times. This is especially true when subtle relationships including partial logical subscriptions are involved. Remember, Postgres still has no ability to maintain replication slots through a failover event, so the HA system must do it instead. Sometimes this means manually recreating slots and advancing them to the correct location if the downstream consumer isn’t a Postgres node. Logical slots are intended for WAL decoding after all, so wal2json or a custom consumer could be involved.

These tracking structures also facilitate bidirectional replication as well. In fact, this is one of the ways BDR, EDB’s bidirectional Postgres extension, maintains two-way replication. In such clusters, it’s imperative to have the full picture, including downstream status, for every node relationship in the cluster. Beyond simple node intent and type, It determines which nodes are recoverable, which should be removed to preserve the health of the rest, cohesive cluster health at both a global and granular level, and so on.

This may or may not also require a consensus model to safely propagate such an aggregated catalog across the cluster, but it’s the missing piece Postgres needs to be cluster aware. Until then, Postgres provides all of the necessary tools at this point; all that remains is combining them. Unfortunately for now, that’s left as an exercise for the reader.