During the last October’s Italian PGDay and European PostgreSQL conference, my friend Marco Nenciarini and I had the pleasure to talk about a new open source plugin for PostgreSQL, called redislog.

In that presentation (“Integrating PostgreSQL with Logstash for real-time monitoring”) we provided an example of our exploration/experimentation approach, with extensive and thorough coverage of testing and benchmarking activities. If you are curious to know more about that process, please refer to the slides of that talk, which are publicly available on Prezi.

For the impatient: redislog taps into PostgreSQL’s logging facility and allows DBAs to ship log events into a Redis queue, directly in JSON format, and to enter the ELK stack through the first class lane.

Devops and the importance of Integration for PostgreSQL

One of the most strategic challenges that PostgreSQL faces today is integration with other technologies in large, complex and distributed systems, either virtual or physical. Therefore, it becomes crucial to effectively manage and monitor these environments. Log management is consequently an important aspect to take into account.

As far as PostgreSQL is concerned, a classic approach has always been to keep PostgreSQL logs separate and analyse them accordingly.

A devops-oriented approach, on the other hand, is to stream PostgreSQL logs into a unique source where all logs from other components are continuously gathered.

Writing redislog is our first attempt to improve the capability of PostgreSQL for log management in a devops environment.

Even though the initial scenario for this application (and the one followed in the presentation) involves the use of Elasticsearch, Logstash and Kibana, known as the “ELK stack“, for log management and real-time monitoring, we do not see any reason why redislog shouldn’t work with other tools – including your own.

The “redislog” extension

Typically, you can have Logstash read your text log files automatically produced by PostgreSQL, then store the contained entries in ElasticSearch and finally use Kibana for data exploration.

What we wanted to do was to exploit the emit log hooks infrastructure that PostgreSQL provides (see elog.c) and create a small prototype that, as an extension, enables to ship log events as JSON objects directly in Redis.

This approach would bypass writing logs on disk, as well as reading and parsing them with Logstash.

That’s how the idea of redislog was conceived.

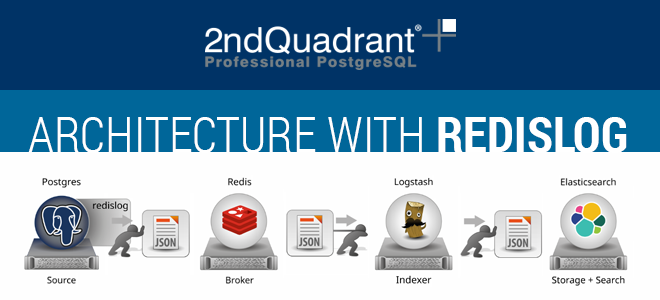

The redislog pipeline for PostgreSQL log management is made up of:

- PostgreSQL, the source

- redislog, the shipper

- Redis, the broker

- Logstash, the indexer

- ElasticSearch, the storage and search facility

- Kibana, the data visualisation platform

PostgreSQL generates events that are intercepted by redislog, then transformed into JSON objects and shipped to a distributed queue in a pool of Redis servers. Logstash reads from the pool of Redis servers and loads the events in ElasticSearch, allowing users to search them via Kibana.

The main features of the current version of redislog (0.2) are:

- Multiple Redis servers (pool)

- Shuffling of Redis hosts for load balancing of the events

- Customisation of JSON log fields (selection and naming)

- Log level filtering

- Disable local logging in case of successful delivery to Redis

- Connection timeout

Some of the items in our TODO list are:

- Shipping optimisation – that is, introduce a pooler of connections to Redis and reuse them amongst Postgres’ backends

- Add CSV output

- Support fixed fields specification

- Support Redis’ channel data type

Installing and configuring redislog for your PostgreSQL is quite straightforward. As any other PostgreSQL extension, it requires to be compiled and deployed with:

make && make install

Then, add in postgresql.conf something like follow:

# Load shared libraries for redislog (mandatory)

shared_preload_libraries = 'redislog'

# Define the Redis pool (at least one server). In this case, we have

# four Redis servers (3 listening on default port 6379, one on 6378)

redislog.hosts = 'redis1, redis2, redis3:6378, redis4'

# Activate load balancing

redislog.shuffle_hosts = on

# Skip local logging

redislog.ship_to_redis_only = on

# Define minimal log level

redislog.min_messages = info

Further information on the available options for redislog is available on the website.

Conclusions

During our presentation at PGConf.eu and PGDay.IT we took time to show the experimentation process that led to the development of redislog.

While the whole story is detailed in the slides of the presentation, as far as this article is concerned, I would like to focus on the importance that continuous experimentation has for us at 2ndQuadrant.

As with any experiment, we were thrilled by the uncertainty of the outcomes and were not afraid of failure. Actually, we were trying to produce early failures, rather than being obsessed by making this extension work. After all, it is part of our devops culture to accept failures, as long as they are intelligent – that is, we are able to learn from them. Our benchmarking activity based on Docker containers granted us to prove that redislog improves the overall efficiency of a system, as well as reaching real-time monitoring without losing any data.

It is important to note that redislog might not be suitable for every use case scenarios, as it obviously places more work on PostgreSQL shoulders. However, especially if you have a heterogeneous system, this approach allows PostgreSQL to integrate itself in a devops monitoring platform very easily. As usual, before using it in production, we encourage users to extensively test redislog in a staging environment.

I personally feel that monitoring is still an area where PostgreSQL should do much better, and in such a context, my view is that integration with other tools like Redis or any AMQP system, can play an important role.

Finally, if you are interested in helping 2ndQuadrant with our research activity in the open source area of PostgreSQL monitoring, please feel free to contact us.